Image and Video Enhancement

Our work in image and video enhancement includes super-resolution without paired low- and high-resolution training data, joint spatial enhancement and temporal interpolation, and non-adversarial video synthesis. Below we describe some of our recent work on these problems.

Video super-resolution. Most existing work on supervised spatio-temporal video super-resolution relies on a large-scale external dataset of paired low-resolution, low-frame-rate and high-resolution, high-frame-rate videos. This assumption is impractical in most applications. In our MM 2021 work, we present a framework called Adaptive Video Super-Resolution (Ada-VSR), which leverages both external and internal information through meta-transfer learning and internal learning. Specifically, meta-learning on a large-scale external dataset yields parameters that can adapt quickly, during the internal learning task, to the novel condition (degradation model) of the given test video. In this way, Ada-VSR exploits both external data and the internal information of the test video itself for super-resolution.

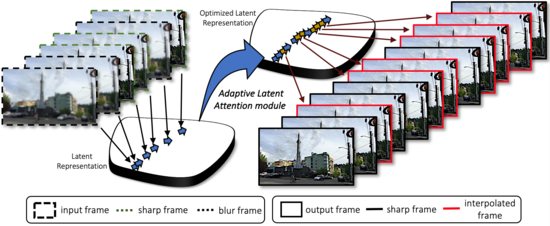
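The internal-learning idea can be illustrated with a minimal Python/NumPy sketch. Ada-VSR's exact procedure is not described here, so this assumes a common internal-learning recipe: the test video is degraded further (here by 2×2 average pooling and by keeping every other frame, both assumed degradations) to form a lower-resolution, lower-frame-rate input, and the test video itself serves as the target. The functions `spatial_downsample`, `temporal_downsample`, and `make_internal_pair` are hypothetical names used only for illustration.

```python
import numpy as np


def spatial_downsample(video: np.ndarray, s: int) -> np.ndarray:
    """Average-pool each frame by factor s (an ASSUMED degradation model).

    video has shape (T, H, W, C); H and W are cropped to multiples of s.
    """
    T, H, W, C = video.shape
    H2, W2 = H // s, W // s
    v = video[:, : H2 * s, : W2 * s]
    # Split each spatial axis into (blocks, s) and average within each s x s block.
    return v.reshape(T, H2, s, W2, s, C).mean(axis=(2, 4))


def temporal_downsample(video: np.ndarray, t: int) -> np.ndarray:
    """Keep every t-th frame to simulate a lower frame rate (ASSUMED)."""
    return video[::t]


def make_internal_pair(test_video: np.ndarray, s: int = 2, t: int = 2):
    """Build a self-supervised (input, target) pair from the test video alone.

    The input is the test video degraded in space and time; the target is the
    test video itself, trimmed so that every target frame lies between
    (or on) retained input frames.
    """
    inp = temporal_downsample(spatial_downsample(test_video, s), t)
    n_target = (inp.shape[0] - 1) * t + 1
    target = test_video[:n_target]
    return inp, target


if __name__ == "__main__":
    # A toy 5-frame, 8x8, 3-channel "test video" with random pixel values.
    rng = np.random.default_rng(0)
    video = rng.random((5, 8, 8, 3))
    x, y = make_internal_pair(video, s=2, t=2)
    print(x.shape, y.shape)
```

Pairs built this way would then drive a few gradient steps of fine-tuning that start from the meta-learned parameters; that fine-tuning loop, and the network itself, are omitted from this sketch.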

Joint enhancement and interpolation. Most existing works generate high-frame-rate sharp videos by learning separate frame-deblurring and frame-interpolation modules. Many of these approaches also make the strong prior assumption that all input frames are blurry, whereas in real-world settings frame quality varies. Moreover, such approaches are trained to perform only one of the two tasks, deblurring or interpolation, in isolation, while many practical situations call for both. In our MM 2020 paper, we proposed a framework, ALANET, to address this problem.

Video synthesis. Most existing works in video synthesis generate videos using adversarial learning. In our CVPR 2020 paper, we focused on generating videos from latent noise vectors, without any reference input frames, and proposed a non-adversarial learning framework that produces higher-quality videos than existing state-of-the-art methods.

This work has been supported by NSF and Vimaan Robotics.