Vision and Language

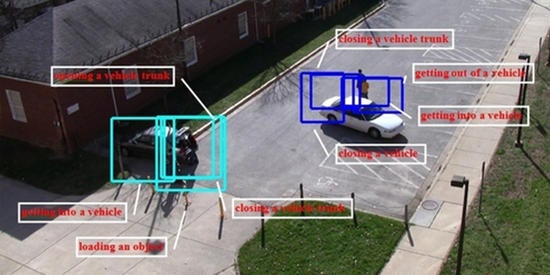

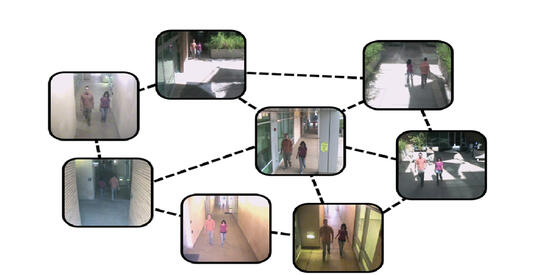

Data that occurs naturally on the web combines visual and textual modalities. Our focus has been on developing efficient text-image/video retrieval methods for naturally occurring, imperfect data, such as that found on social media. Our initial work addressed retrieving relevant video moments from text queries, with the retrieval models learned under weak supervision [CVPR 2019]. The weak supervision can take the form of textual captions for a video, rather than annotations specifying where in the video certain activities happen. Building on this, we showed how to retrieve from a corpus of videos rather than from a single video: in our TIP 2021 paper, we showed that it is possible to select both the right video and the moment within it that is most relevant to a given query. In our ECCV 2022 paper, we considered the localization of novel events unseen during training, based on their semantic similarity to events seen in the training data.

The above methods, like most retrieval methods, assume that the text is clean and reliable, which is unrealistic when dealing with social media. We are currently developing text-image/video retrieval methods that are robust to noise in the text.

This work has been supported by the NSF and ONR.