Three papers accepted to ACM-Multimedia 2020

Papers on High-Frame Rate Video Enhancement, Adversarial Knowledge Transfer and Video Fast-Forwarding accepted to ACM-MM 2020.

- The paper, ALANET: Adaptive Latent Attention Network for Joint Video Deblurring and Interpolation, accepted as an oral presentation at ACM-MM 2020, proposes the Adaptive Latent Attention Network (ALANET), which synthesizes sharp, high frame-rate videos by performing deblurring and interpolation jointly. ALANET applies self-attention and cross-attention to the latent representations of the low frame-rate, poor-quality input frames to generate enhanced high frame-rate videos.

Title: ALANET: Adaptive Latent Attention Network for Joint Video Deblurring and Interpolation. Akash Gupta, Abhishek Aich, and Amit K. Roy-Chowdhury, ACM International Conference on Multimedia (ACM-MM), 2020.
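For readers unfamiliar with the two attention variants mentioned above, the following is a minimal sketch of generic scaled dot-product attention, not the ALANET architecture itself. The function names, the toy two-dimensional "latent" vectors, and the absence of learned projections are all assumptions made for illustration. Self-attention draws queries, keys, and values from the same frame's latents, while cross-attention takes the queries from one frame and the keys and values from another.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Generic scaled dot-product attention (illustrative, no learned weights)."""
    dim = len(keys[0])
    attended = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        attended.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return attended

# Hypothetical latent vectors for two neighboring input frames.
frame_a = [[1.0, 0.0], [0.0, 1.0]]
frame_b = [[0.5, 0.5], [1.0, 1.0]]

# Self-attention: frame_a attends to itself.
self_attended = attention(frame_a, frame_a, frame_a)
# Cross-attention: frame_a queries the latents of frame_b.
cross_attended = attention(frame_a, frame_b, frame_b)
```

Each output row is a convex combination of the value vectors, so the cross-attended latents of `frame_a` stay within the range spanned by the latents of `frame_b`.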

- The paper, Adversarial Knowledge Transfer from Unlabeled Data, accepted at ACM-MM 2020, proposes a novel adversarial learning framework that transfers knowledge from internet-scale unlabeled data to improve the performance of a classifier on a given visual recognition task. The framework aligns the feature space of the unlabeled source data with that of the labeled target data, so that the target classifier can be used to predict pseudo labels on the source data.

Title: Adversarial Knowledge Transfer from Unlabeled Data. Akash Gupta*, Rameswar Panda*, Sujoy Paul, Jianming Zhang, and Amit K. Roy-Chowdhury, ACM International Conference on Multimedia (ACM-MM), 2020. (* joint first authors)

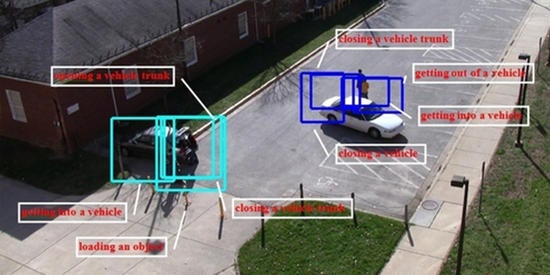

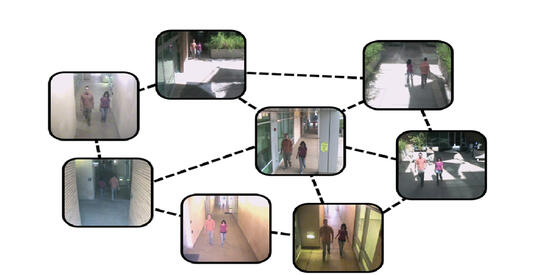

- Additionally, Amit Roy-Chowdhury is a co-author on the paper, Distributed Multi-agent Video Fast-forwarding, accepted as an oral presentation at ACM-MM 2020, with collaborators at Northwestern University. This paper presents a consensus-based distributed multi-agent video fast-forwarding framework, named DMVF, that fast-forwards multi-view video streams collaboratively and adaptively. In this framework, each camera view is handled by a reinforcement learning-based fast-forwarding agent, which periodically chooses from multiple strategies to selectively process video frames and transmit the selected frames at adjustable paces. During every adaptation period, each agent communicates with a number of neighboring agents and decides its strategy for the next period.

Title: Distributed Multi-agent Video Fast-forwarding. Shuyue Lan, Zhilu Wang, Amit K. Roy-Chowdhury, Ermin Wei, and Qi Zhu, ACM International Conference on Multimedia (ACM-MM), 2020.
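The idea of agents reaching agreement through neighbor-only communication can be illustrated with a textbook average-consensus iteration. This is a sketch under stated assumptions, not the DMVF algorithm: the per-agent importance scores, the ring topology, the step size, the margin, and the strategy names `"slow"`, `"normal"`, and `"fast"` are all hypothetical.

```python
def consensus_step(values, neighbors, step=0.3):
    """One round: each agent nudges its value toward its neighbors' values."""
    return [
        x + step * sum(values[j] - x for j in neighbors[i])
        for i, x in enumerate(values)
    ]

def run_consensus(values, neighbors, rounds=50):
    """Repeat neighbor averaging; on a connected undirected graph with a
    small enough step, all agents approach the global mean."""
    for _ in range(rounds):
        values = consensus_step(values, neighbors)
    return values

def pick_strategy(own_score, agreed_mean, margin=0.1):
    """Hypothetical rule: views scoring above the agreed mean slow down
    (keep more frames), views scoring below it speed up."""
    if own_score > agreed_mean + margin:
        return "slow"
    if own_score < agreed_mean - margin:
        return "fast"
    return "normal"

# Four camera agents on a ring; each talks only to its two neighbors.
scores = [0.9, 0.2, 0.5, 0.6]  # assumed per-view importance estimates
neighbors = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}

agreed = run_consensus(scores, neighbors)
strategies = [pick_strategy(s, m) for s, m in zip(scores, agreed)]
```

No agent ever sees the full set of scores, yet every agent ends up with nearly the same estimate of the system-wide mean, which it can then compare against its own score when choosing a pace for the next period.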