Situational Awareness Under Resource Constraints

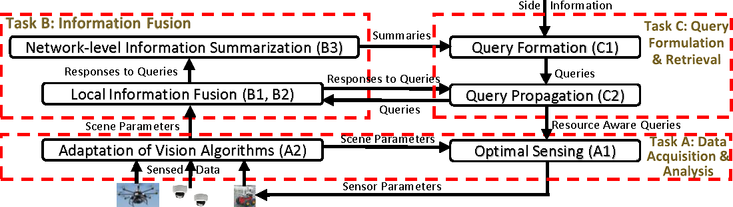

The goal of this project is to enable an operation center to retrieve dynamic situational awareness information, in a timely manner, from information-rich sensors deployed in the field during disaster recovery or search-and-rescue missions, which typically take place in uncertain, resource-constrained environments. Toward realizing a networked system that supports the retrieval of time-critical, operation-relevant situational awareness information, this project will address the following challenges, among others: (a) How do we intelligently activate field sensors, and acquire and process their data, to extract semantically relevant information that is easily interpreted? (b) How do we formulate expressive and effective queries that enable near-real-time retrieval of the relevant situational awareness information while adhering to resource constraints? (c) How do we impose a network structure that facilitates cost-effective query propagation and response retrieval? The project encompasses the following three closely interrelated tasks:

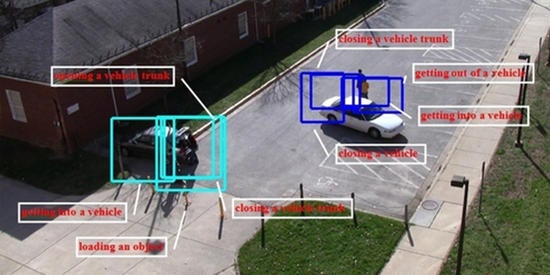

Task A: Resource-Constrained Data Acquisition and Analysis. This task looks at how to reconfigure the network and adapt video analysis in real time to meet different (sometimes conflicting) application requirements, given resource constraints.

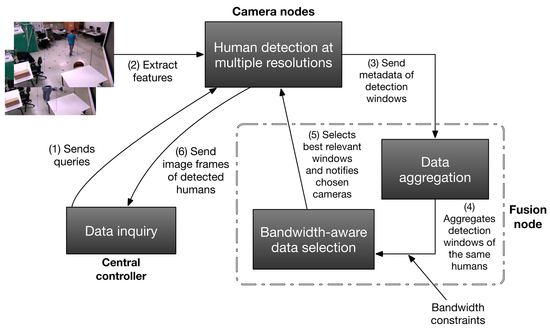

Task B: Information Fusion Under Resource Constraints. This task proposes methods to locally process and fuse the generated content, given the query needs and resource constraints. It also considers how to summarize the content received in response to queries to facilitate further analysis at the operation center.

Task C: Cost-effective Query Formulation and Retrieval. This task will address challenges in query formulation, refinement, and retrieval, including (i) prioritizing queries according to importance criteria, (ii) disseminating queries effectively in the field, and (iii) retrieving the sensed information effectively.

Video Analysis under Resource Constraints

Video analysis is known to be time-consuming and resource-hungry. We are developing methods for scene understanding in video with limited resources. Specifically, we have developed resource-aware methods for object detection and tracking, as well as object and scene categorization methods that are computationally more efficient than many state-of-the-art methods.

Sample Publications

- Frugal Following: Power Thrifty Object Detection and Tracking for Mobile Augmented Reality [Code]
  K. Apicharttrisorn, X. Ran, J. Chen, S. Krishnamurthy, and A. Roy-Chowdhury, SenSys, 2019.

- Construction of Diverse Image Datasets from Web Collections with Limited Labeling
  N. C. Mithun, R. Panda, and A. Roy-Chowdhury, IEEE Trans. on Circuits and Systems for Video Technology, 2019.

- Learning Long-Term Invariant Features for Vision-Based Localization
  N. C. Mithun, C. Simons, R. Casey, S. Hilligardt, and A. Roy-Chowdhury, IEEE Winter Conf. on Applications of Computer Vision (WACV), 2018.

- Exploiting Transitivity for Learning Person Re-identification Models on a Budget
  S. Roy, S. Paul, N. Young, and A. Roy-Chowdhury, IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2018.

- Multi-View Surveillance Video Summarization via Joint Embedding and Sparse Optimization
  R. Panda and A. Roy-Chowdhury, IEEE Trans. on Multimedia (TMM), 2017.

-

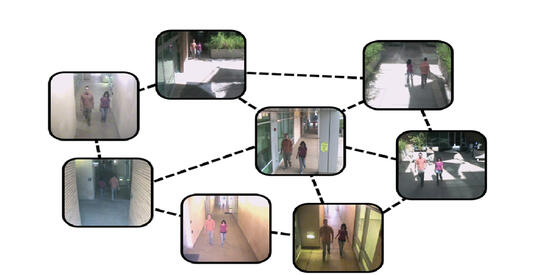

Unsupervised Adaptive Re-identification in Open World Dynamic Camera Networks

R. Panda, A. H. Bhuiyan, V. Murino and A. Roy-Chowdhury, IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2017 (Spotlight).

- Weakly Supervised Summarization of Web Videos
  R. Panda, A. Das, Z. Wu, J. Ernst, and A. Roy-Chowdhury, International Conference on Computer Vision (ICCV), 2017.

- Generating Diverse Image Datasets with Limited Labeling
  N. C. Mithun, R. Panda, and A. Roy-Chowdhury, ACM International Conf. on Multimedia (ACM-MM), 2016.

- Opportunistic Image Acquisition of Individual and Group Activities in a Distributed Camera Network
  C. Ding, J. H. Bappy, J. A. Farrell, and A. Roy-Chowdhury, IEEE Transactions on Circuits and Systems for Video Technology, 2016.

- Managing Redundant Content in Bandwidth Constrained Wireless Networks
  T. Dao, S. Krishnamurthy, A. Roy-Chowdhury, and T. LaPorta, International Conf. on Emerging Networking EXperiments and Technologies, 2014.

Situational Awareness with Network Constraints

In this part of the project, we explore how network constraints affect the ability to derive robust situational awareness of a scene imaged by a network of sensors (e.g., cameras), including settings where the sensors need to communicate with a central server.

Sample Publications

- Exploiting Global Camera Network Constraints for Unsupervised Video Person Re-identification
  X. Wang, R. Panda, M. Liu, Y. Wang, and A. Roy-Chowdhury, IEEE Trans. on Circuits and Systems for Video Technology (T-CSVT), 2020.

- Edge-Assisted Detection and Summarization of Key Global Events from Distributed Crowd-Sensed Data
  A. Fahim, A. Neupane, E. Papalexakis, L. Kaplan, S. V. Krishnamurthy, and T. Abdelzaher, IEEE International Conference on Cloud Engineering, 2019.

- Accurate and Timely Situation Awareness Retrieval from a Bandwidth Constrained Camera Network
  T. Dao, A. Roy-Chowdhury, N. Nasrabadi, S. Krishnamurthy, P. Mohapatra, and L. Kaplan, IEEE Intl. Conf. on Mobile Ad-Hoc and Sensor Systems, 2017.

- Energy Efficient Object Detection in Camera Sensor Networks
  T. Dao, K. Khalil, A. Roy-Chowdhury, S. Krishnamurthy, and L. Kaplan, IEEE Intl. Conf. on Distributed Computing, 2017.

- Managing Redundant Content in Bandwidth Constrained Wireless Networks
  T. Dao, A. K. Roy-Chowdhury, H. V. Madhyastha, S. V. Krishnamurthy, and T. LaPorta, IEEE/ACM Transactions on Networking, 2016.

Multimodal Analysis

Please see Joint Image-Text Analysis for more details.

Multi-sensor Networks

Please see Wide Area Scene Analysis for more details.